Introduction

To get started with Machine Learning in Qlik, you will need some basic understanding of scripting and data modelling. Overall, the scripts will not be super complicated if you have been developing QlikView or Qlik Sense applications for some years (and with that I mean developing from scratch, not only expressions and interface design). However, you do not need any previous knowledge in statistics or Machine Learning to understand most of it. If you want to go further and understand why the code works, you will certainly need some math skills and a little bit of knowledge about statistics.

Machine Learning and Artificial Intelligence?

Even though there are already thousands of articles on the topic of Machine Learning and Artificial Intelligence, I will start by sharing a high level picture of these disciplines. Artificial Intelligence can be divided in three areas:

Artificial Narrow Intelligence

Artificial Narrow Intelligence, also known as Weak AI, is “goal oriented” and solves a single given task. It is the only AI currently available.

Artificial General Intelligence

AGI or Strong Intelligence will be able to solve “any” task. Some scientists question if AGI will ever truly exist and whether if it will take us 20 or 1,000 years to get there. Clearly, it won’t be developed in Qlik.

Artificial Super Intelligence

This kind of AI is way beyond human intelligence and surpasses our understanding. You probably guessed it right… this AI won’t be developed in Qlik either.

Machine Learning

Machine Learning is one of the main areas in Artificial Narrow Intelligence. Other branches are Virtual Assistants and Expert Systems. The theoretical idea to develop computer algorithms that improve automatically through experience goes way back in modern history. The first ideas were developed as early as 1950, but the real boost came 20 years ago, when computers became competent and cheap enough to run these algorithms with good results. However, as computers became faster and faster, the volume of data also increased. When working with massive volumes of information, it is impossible to look at each data point individually, so a new approach is required. Deep Learning is — in some way — Machine Learning for Big Data. Other algorithms like Artificial Neural Networks try to simulate how our brain works.

Machine Learning can be divided in three areas:

Supervised Learning

The algorithm is “trained” with data that already has an outcome. After the training is done, we can use it to “predict” the outcome on independent sets of data.

Supervised Learning itself is divided in two groups:

- Regression: Predicts the outcome of a given sample where the output is in the form of real values. Examples: Predict number of birds in a tree based on the type of tree and its size.

- Classification: Predicts the outcome of a given sample where the output is in the form of categories. Predict if a patient is at risk of getting diabetes (yes/no).

Examples: Linear Regression, Logistic Regression, CART, Naïve Bayes, KNN, Random Forests are examples of Supervised Learning Algorithms.

Unsupervised Learning

The purpose of the unsupervised algorithms is not to predict a result. It is rather used to find similarities and patterns in the data. It is often used as a step before using a supervised algorithm to “wash” and “categorize” the data. In other words, it makes sure that you only use data that is relevant for the algorithm.

Unsupervised learning splits in three types:

- Association: Calculate the probability of a datapoint co-existing with another datapoint. A popular example is basket analysis: If a customer purchases a raincoat, he is 80% likely to also purchase an umbrella.

- Clustering: Groups datapoints in clusters based on how similar they are.

- Dimensionality Reduction: Reduces the number of variables in a dataset while ensuring that important information is still conveyed. Remove features that are not relevant for the selected purpose. These algorithms are great assistants in the Feature Engineering work.

Examples: Apriori, K-means and PCA.

Reinforcement Learning

In reinforcement learning, the algorithm is constantly fed with new information. It also takes the result of its own predictions into account when doing new predictions.

Examples: Traffic Light Control systems and agents in computer games.

Qlik + Machine Learning Series

With this article, we start a new series of posts that will cover some of the most popular Machine Learning algorithms, going from Linear / Logistical Regressions and Decision Trees to Deep Learning and Artificial Neural Networks.

Example: Using linear gradient descent

Task: Predict the amount of an insurance claim given the number of claims.

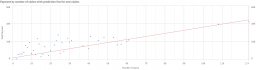

This chart contains data about a fictional car insurance company. It shows “total payment” by “number of claims”. Each dot represents a period in time like months or years.

Reference: Swedish Committee on Analysis of Risk Premium in Motor Insurance

Data Source: Auto Insurance in Sweden (download data here)

Data extract (first 10 rows):

- X = Number of Insurance Claims

- Y = Total Payment for all the insurance claims in thousands of Swedish Kronor

In this case, the insurance company wants to predict how much they will pay for the claims they received during the month. Let us assume that during the last period they received 36 claims in total. How much money should they reserve for those claims?

The goal for the linear gradient descent algorithm is to figure out the formula of a straight line that fits, in the best possible way, all the data points.

Manual prediction

Nothing is better than experimenting with data! Here is a Qlik Sense visualization where you can try to adjust the line by dragging the two sliding bars:

Adjust the values of [Slope start (θ0)] and [Slope angle (θ1)] and try to make a line that fits all dots. Then, with the help of your prediction line, answer the question: “How much money should the insurance company reserve for 36 claims?”

What is the right answer?

The red line in this chart is the result of a gradient descent algorithm. It came to the following conclusion:

- Slope start (θ0) = 19.79

- Slope angle (θ1) = 3.42

Perhaps you remember the equation for a straight line from school: y = kx + m.

Just replace it with this equation: “Payment Prediction” = “Slope start (θ0)” + “Slope angle (θ1)” * “Number of Claims”.

The answer would be: 19.79 + 3.42 * 36 ~ 142 SEK.

But, is that the correct answer? Well, in Machine Learning there is no “right answer”. These algorithms usually give you a “pretty ok” number! 😊 The more data it has to “train” on, the better it will predict. A key for making good predictions is to understand which algorithm to use and, of course, Linear Gradient Descent is not always the best choice!

A straight line will rarely fit your data perfectly, but it is much better than a wild guess. However, it is not a good idea to make a curve that matches all dots either (this is called Over Fitting and we will discuss it in another post).

If you are familiar with statistics, you can create a prediction line using simple linear regression. However, if the number of columns in your data increases, you will have to use a lot of “expensive mathematics” to build a prediction (works well, but requires a lot of computer power). This is where machine learning algorithms come handy. The gradient decent algorithm does not get more complex as the number of columns increases. It is a cheap and fast option for larger data sets!

Sample Script

Implementing a simple Gradient Descent Algorithm in Qlik Sense is not that difficult and there is no need to implement Python! In my next articles, I will explain this algorithm in detail, but here is a little sneak peak of what we will see, so stay tuned!

// LOAD TRAINING DATA

Training_table:

LOAD

num(num#(X,'',',')) as X,

num(num#(Y,'',',')) as Y

FROM [lib://AI_DATA/INSURANCE/data.txt]

(txt, codepage is 28591, embedded labels, delimiter is '\t', msq);

// GRADIENT DESCENT FUNCTION

// DEFINE HOW MANY TIMES TO LOOP

Let iterations= 15000;

// DEFINE LEARNING RATE

Let α = 0.001;

// DEFINE START VALUES FOR ALL THETAS (WEIGHTS).

Let θ0 = 0;Let θ1 = 0;

// GET HOW MANY ROWS TRAINING DATA HAS.

Let m = NoOfRows('Training_table');

// START LOOP

For i = 1 to iterations

// CREATE A SUMMARY TEMP TABLE

temp:

LOAD

Rangesum(($(θ0) + (X* $(θ1)) - Y),

peek(deviation_0)) as deviation_0,

Rangesum(($(θ0) + (X * $(θ1)) - Y) * X,

peek(deviation_X)) as deviation_X

Resident Training_table;

// GET THE LAST ROW FROM THE temp TABLE

// THAT HAS THE TOTAL SUM OF ALL ROWS

Let deviation_0 = Peek('deviation_0',-1,'temp');

Let deviation_X = Peek('deviation_X',-1,'temp');

// DROP THE temp TABLE. NO LONGER NEEDED

drop table temp;

// CHANGE THE VALUE OF EACH θ TOWARDS A BETTER θ

Let θ0 = θ0 - ( α * 1/m * deviation_0);

Let θ1 = θ1 - ( α * 1/m * deviation_X);

// REPEAT UNTIL ITERATIONS HAVE REACHED THE GIVEN MAX

next i;

3 Comments

Comments are closed.

Hi Robert

Many thanks for this.

I am very interested in how to apply AI & ML using the Qlik platform, realising that of course it will be very limited in what it can do!

Anyway I followed your instructions and the loop of 15000 iterations has resulted in Theta_00 being 19.98 and Theta_01 being 3.41 (so not far away from your initial suggestion using the straight line!)

I was just wondering how to use this calculation etc. in Qlik Sense to visualise the result?

So far I have used a Scatter Chart with The Number of Claims along the X axis, the Total Payment up the Y axis (just like yours) and I made the bubble size also the Total Payment (wasn’t sure what else I could use).

I then experimented with using the expression you mention: “Payment Prediction” = “Slope start (θ0)” + “Slope angle (θ1)” * “Number of Claims” as a reference line:

1st I tried it as a Y-axis reference line, and then I tried it as an X-axis reference line, but in both cases the line doesn’t appear. I don’t think a scatter chart can support a reference line which is a curve?

Plotting the expression in a table alongside the various Number of Claims and Total Payments records, and that does give values:

NumClaims Number of Claims Total Payment Prediction Line Equation ”

= vTheta_00 ” ”

= vTheta_01

”

124 124 422.2 443.33 19.98 3.41

108 108 392.5 388.70 19.98 3.41

41 82 254.7 319.92 19.98 3.41

23 69 209.5 295.52 19.98 3.41

61 61 217.6 228.24 19.98 3.41

60 60 202.4 224.83 19.98 3.41

29 58 237.2 237.98 19.98 3.41

57 57 170.9 214.58 19.98 3.41

55 55 162.8 207.76 19.98 3.41

53 53 244.6 200.93 19.98 3.41

13 52 230.5 257.47 19.98 3.41

24 48 272.8 203.84 19.98 3.41

48 48 248.1 183.86 19.98 3.41

45 45 214 173.62 19.98 3.41

40 40 119.4 156.55 19.98 3.41

37 37 152.8 146.30 19.98 3.41

11 33 102 172.62 19.98 3.41

31 31 209.8 125.82 19.98 3.41

30 30 194.5 122.41 19.98 3.41

14 28 173 135.56 19.98 3.41

9 27 188.2 152.13 19.98 3.41

27 27 92.6 112.16 19.98 3.41

26 26 187.5 108.75 19.98 3.41

25 25 69.2 105.34 19.98 3.41

22 22 161.5 95.09 19.98 3.41

7 21 154.2 131.65 19.98 3.41

20 20 98.1 88.27 19.98 3.41

19 19 46.2 84.85 19.98 3.41

6 18 80.3 121.41 19.98 3.41

17 17 142.1 78.02 19.98 3.41

8 16 131.7 94.59 19.98 3.41

16 16 59.6 74.61 19.98 3.41

15 15 32.1 71.19 19.98 3.41

4 12 62.5 100.92 19.98 3.41

12 12 58.1 60.95 19.98 3.41

10 10 65.3 54.12 19.98 3.41

5 10 61.2 74.11 19.98 3.41

3 9 57.5 90.68 19.98 3.41

2 2 6.6 26.81 19.98 3.41

0 0 19.98 19.98 3.41

Do you have any suggestions? Is there a follow up ML article?

Many thanks

Richard

Hi again

I have tried using a Combo chart today and actually don’t get the expected line curve result that you show but I do get a line that appears to be as smooth as it can be along the x-axis staying as close as possible to all points but not going out to touch extremes (high or low).

I’d like to show you an image but not sure how I can get an image to you?

Regards

Richard

Hi Richard, and thanks so much for your comments and questions. I will drop you a mail so that we can find a way to approach your questions. Best Regards, Robert